17. UNIT 9. Neural Networks

This unit gives an introduction to neural networks, based largely on [1].

17.1. A very simple first example of a NN

Adapted from here.

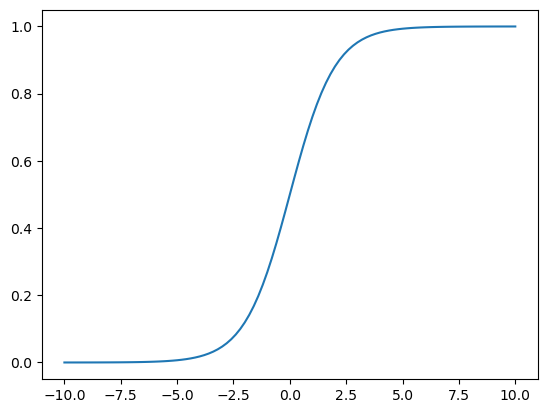

Let us start by looking at the shape of a sigmoid function, which we will use later as the final layer of the classification NN.
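A minimal sketch of the sigmoid function, assuming NumPy is available; the name `sigmoid` and the sampling range are choices made here for illustration, not taken from the original notebook.

```python
import numpy as np

def sigmoid(x):
    """Logistic sigmoid: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))

# Sample the curve on an evenly spaced grid (the range is an arbitrary choice).
xs = np.linspace(-10, 10, 201)
ys = sigmoid(xs)

# The curve is S-shaped: close to 0 on the left, 0.5 at x = 0, close to 1 on the right.
for x in (-10.0, -2.0, 0.0, 2.0, 10.0):
    print(f"sigmoid({x:+.1f}) = {sigmoid(x):.4f}")
```

Passing `xs` and `ys` to a plotting call such as matplotlib's `plt.plot(xs, ys)` draws the curve.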


In the following code, the first layer holds one weight per feature and computes the dot product of the weight vector and the feature vector.

The second layer applies a sigmoid function to that dot product, and its output decides whether the network answers 0 or 1.

The input data and the correct output are given here:

| item | feature 1 | feature 2 | output |
|---|---|---|---|
| 1 | 1.66 | 1.56 | 1 |
| 2 | 2.00 | 1.5 | 0 |

As weights we will use the vector \((1.45,-0.66)\) to predict the output of the two data points, followed by a sigmoid function to classify each one into category 0 or 1.
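The two layers can be sketched as follows. This is a minimal version, assuming NumPy and no bias term; the names `sigmoid`, `predict`, `item_1` and `item_2` are illustrative and may differ from the original notebook.

```python
import numpy as np

def sigmoid(x):
    """Logistic sigmoid, used here as the second (final) layer."""
    return 1.0 / (1.0 + np.exp(-x))

def predict(features, weights):
    """First layer: dot product of features and weights. Second layer: sigmoid."""
    layer_1 = np.dot(features, weights)
    return sigmoid(layer_1)

weights = np.array([1.45, -0.66])
item_1 = np.array([1.66, 1.56])  # correct output: 1
item_2 = np.array([2.00, 1.50])  # correct output: 0

p1 = predict(item_1, weights)
p2 = predict(item_2, weights)
print(f"item 1: {p1:.4f}")  # about 0.7986
print(f"item 2: {p2:.4f}")  # about 0.8710
```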

The prediction result is: [0.7985731]

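The result is good for the first data point, whose correct output is 1. For the second data point, however, the prediction will be wrong: the network again returns a value close to 1, while the correct output is 0.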

The prediction result is: [0.87101915]

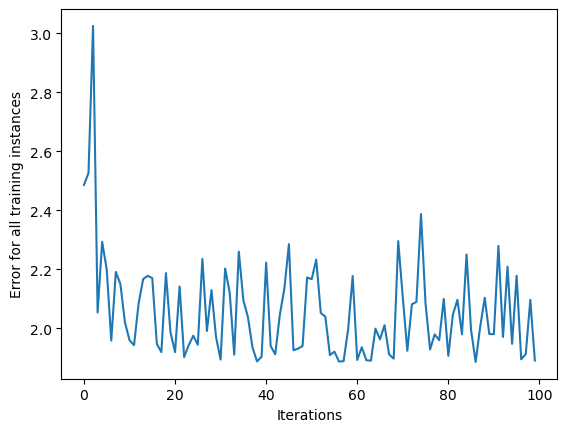

So we need to train our network. Training first assesses the error of the prediction and then adjusts the weights to reduce it. To adjust the weights, we use the gradient descent and backpropagation algorithms. Gradient descent finds the direction and the rate with which to update the parameters. It has to be applied to a specific function, which here is the error of the prediction.
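As a reminder of the general idea, one gradient descent step moves each weight against the gradient of the error. The symbols \(E\) (error), \(w\) (a weight) and \(\eta\) (learning rate) are notation introduced here for illustration, not taken from the text above:

\[
w \leftarrow w - \eta \, \frac{\partial E}{\partial w}
\]

A positive derivative means that increasing \(w\) increases the error, so the weight is decreased, and vice versa.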

First, we measure the mean squared error (MSE):
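A minimal sketch of the error computation for the second data point, assuming NumPy; with a single prediction the mean squared error reduces to the squared difference between prediction and target. The function name `mse` is illustrative.

```python
import numpy as np

def mse(prediction, target):
    """Mean squared error between predictions and targets."""
    diff = np.asarray(prediction, dtype=float) - np.asarray(target, dtype=float)
    return np.mean(diff ** 2)

weights = np.array([1.45, -0.66])
item_2 = np.array([2.00, 1.50])
target = 0.0  # correct output for the second data point

# Forward pass: dot product followed by a sigmoid.
prediction = 1.0 / (1.0 + np.exp(-np.dot(item_2, weights)))
error = mse(prediction, target)
print(f"Prediction: {prediction:.6f}; Error: {error:.6f}")
```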

Prediction: [0.87101915]; Error: [0.75867436]

A minimal gradient descent step:
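The sketch below is one way to obtain the values printed in this section. It assumes that the derivative is that of the squared error with respect to the prediction, \(2(p - t)\), and that it is subtracted directly from both weights. This is an inference from the numbers, not a full backpropagation step: it ignores the chain rule through the sigmoid and the dot product, and uses no learning rate.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def predict(features, weights):
    return sigmoid(np.dot(features, weights))

weights = np.array([1.45, -0.66])
item_2 = np.array([2.00, 1.50])
target = 0.0

prediction = predict(item_2, weights)

# Derivative of (prediction - target)**2 with respect to the prediction.
derivative = 2.0 * (prediction - target)

# Simplified update (assumption): shift every weight by the same amount.
new_weights = weights - derivative

new_prediction = predict(item_2, new_weights)
new_error = (new_prediction - target) ** 2

print("The derivative is", derivative)
print("The old weights are", weights)
print("The new weights are", new_weights)
print("Prediction:", new_prediction, "Error:", new_error)
```

The prediction for the second data point is now close to 0, as desired. Subtracting the full derivative works for this single example; in practice the step is usually scaled by a small learning rate so that an update for one data point does not overshoot.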

The derivative is [1.7420383]

The old weights are [ 1.45 -0.66]

The new weights are [-0.2920383 -2.4020383]

Prediction: [0.01496248]; Error: [0.00022388]